n. A citation that combines a textual reference with a durable hyperlink to that exact passage in a preserved copy of the source.

v. (–cited, –citing) To create a citation by joining a selected text passage to a permanent, pinpoint hyperlink of its archived source.

Before going into the mechanics, see it in action:

Here’s the deepcite I created there—try the link for yourself:

The Twin Flaws of a Standard Hyperlink

The architecture of the web is fundamentally at odds with the demands of lasting citation. Any link we use as a reference is undermined by two distinct problems: one of permanence, the other of precision.

The permanence problem, link rot, is well known. A normal hyperlink is a fragile, hopeful pointer to a resource you don't control. Pages change, URL schemes evolve, and critical information simply disappears. The Internet Archive has been fighting this battle for decades.

The precision problem is more subtle, a chronic friction we’ve just grudgingly accepted. A link to a 10,000-word article isn’t a citation; it’s a research assignment you’ve foisted upon your reader. In effect they are asked to (a) take you at your word, (b) try to guess the right keywords for a ⌘ F search with whatever contextual clues you’ve provided, or (c) just resign themselves to reading the document in full.

The web has an emerging tool for the precision problem in the text fragment URL. By appending #:~:text=... to the end of a link, you can direct nearly every modern web browser to scroll to and highlight a specific passage on that page. At first blush, this recent specification (still a W3C community group draft rather than a formal standard) seems incredibly useful. Provide a colleague the precise pincite to one consequential fact you spot on line 2416 of a dense environmental report. Or point your future self back to a key insight toward the end of an obscure scientific study whose significance took you multiple reads to appreciate. This looks promising...
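To make the mechanics concrete, here is a minimal Python sketch of how a selected passage can be turned into a text fragment URL. The function names are my own illustration, not part of the deepcite script, and the sketch only covers the simplest form of the directive (a single exact passage, no prefix, suffix, or start/end range).

```python
from urllib.parse import quote, urldefrag


def encode_fragment_text(text: str) -> str:
    """Percent-encode a passage for use inside a text directive."""
    # quote(..., safe="") escapes spaces, commas, and ampersands. The hyphen
    # is escaped by hand because the directive syntax also reserves it.
    return quote(text, safe="").replace("-", "%2D")


def text_fragment_url(page_url: str, passage: str) -> str:
    """Return page_url with a #:~:text= directive targeting the passage."""
    base, _old_fragment = urldefrag(page_url)  # drop any existing #fragment
    return f"{base}#:~:text={encode_fragment_text(passage)}"


print(text_fragment_url("https://example.com/", "This domain is"))
# prints https://example.com/#:~:text=This%20domain%20is
```

Very long selections are usually better expressed as a start,end pair so the URL stays short; that refinement is left out here to keep the sketch small.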

But on its own, this technology only exacerbates the permanence problem. It creates a citation so specific that a single punctuation fix on the live page will break it—a phenomenon one might aptly call “fragment rot.”
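A small sketch shows how little it takes. The check below is a simplified stand-in for a browser's fragment matching (the real algorithm differs in its details), and the sample sentences are invented purely for illustration.

```python
def normalize(text: str) -> str:
    """Collapse runs of whitespace so line wrapping does not affect matching."""
    return " ".join(text.split())


def fragment_resolves(page_text: str, passage: str) -> bool:
    """Return True if the cited passage still appears verbatim in the page."""
    return normalize(passage) in normalize(page_text)


# Invented example: the citation was made against the original wording.
cited = "the permit was issued in 2019 however, monitoring lapsed"
original_page = (
    "Records show the permit was issued in 2019 however, "
    "monitoring lapsed soon after."
)
# An editor later adds one semicolon to the live page.
corrected_page = (
    "Records show the permit was issued in 2019; however, "
    "monitoring lapsed soon after."
)

print(fragment_resolves(original_page, cited))   # True
print(fragment_resolves(corrected_page, cited))  # False
```

The second result is fragment rot in miniature: the page is still online and arguably better, yet the pinpoint citation no longer lands anywhere.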

A truly useful web citation needs to solve both problems at once: it must point precisely to the relevant portion of a cited source, and it must do it enduringly. For that to happen, the source itself must be frozen in time, exactly as it appeared when the citation was made.

Calling All Archivists

Inspired by my earlier work on a similar script for DEVONthink (which also has significant new features and improvements that I'll detail in a follow-up post), I saw the potential a text fragment tool could have. I enlisted my trusty AI coding assistant, and within five minutes, I had a working proof of concept. But my initial success was short-lived. I experienced fragment rot firsthand after just a few uses, and then again a few days later. The theoretical risk I’d anticipated was a practical, frequent reality.

This technical frustration soon collided with a much larger concern. Immediately after the presidential inauguration on January 20, 2025, information began disappearing from federal government websites—first sporadically, very soon systematically. Databases and other resources conservationists have long relied on from agencies like the EPA and NOAA, among others, abruptly went dark.

As a public interest environmental lawyer, my work is built on this data. My cases under the Endangered Species Act (ESA), Clean Water Act (CWA), and National Environmental Policy Act (NEPA, RIP) depend on a stable, verifiable administrative record. This wasn’t just another vaguely menacing news item portending yet more symbolic violence against the Rule of Law. It represented (and still represents) a clear, concrete, and immediate threat to my clients' interests and to the science-based, mission-driven advocacy my colleagues and I have built our careers on.

I had already explored the world of self-hosting enough to have come across ArchiveBox, an open-source tool that creates high-fidelity, personal archives of web content. Its recent beta API made it the perfect engine. But ArchiveBox alone wasn’t sufficient. The URL for each archived snapshot includes a timestamp with microsecond precision, making it impossible to predict from the client side. I needed a custom bridge to sit between my browser and my archive.

The Web Deepcite Tool

My solution is composed of two parts that work together: a script that runs in your browser, and an optional backend you can host yourself.

1. Browser Deepciter (client)

The heart of the system is a single JavaScript file. I run it in Orion Browser using its excellent Programmable Button functionality with a keyboard shortcut, but it works just as well as a standard bookmarklet in any other modern browser.

When you select text on a page and run the script as-is, it assembles and stores in your system clipboard a deepcite formatted in rich text looking like this:

Note: the citation is hyperlinked, with a text fragment, to the original:

https://example.com/#:~:text=This%20domain%20is ...

When you configure the script by pointing PROXY_BASE_URL to your self-hosted backend, and specifying URL_PREFIX to match your backend’s configuration, it creates a deepcite that looks the same, except that the citation's hyperlink points to the archived webpage.

2. Self-Hosted Backend (server)

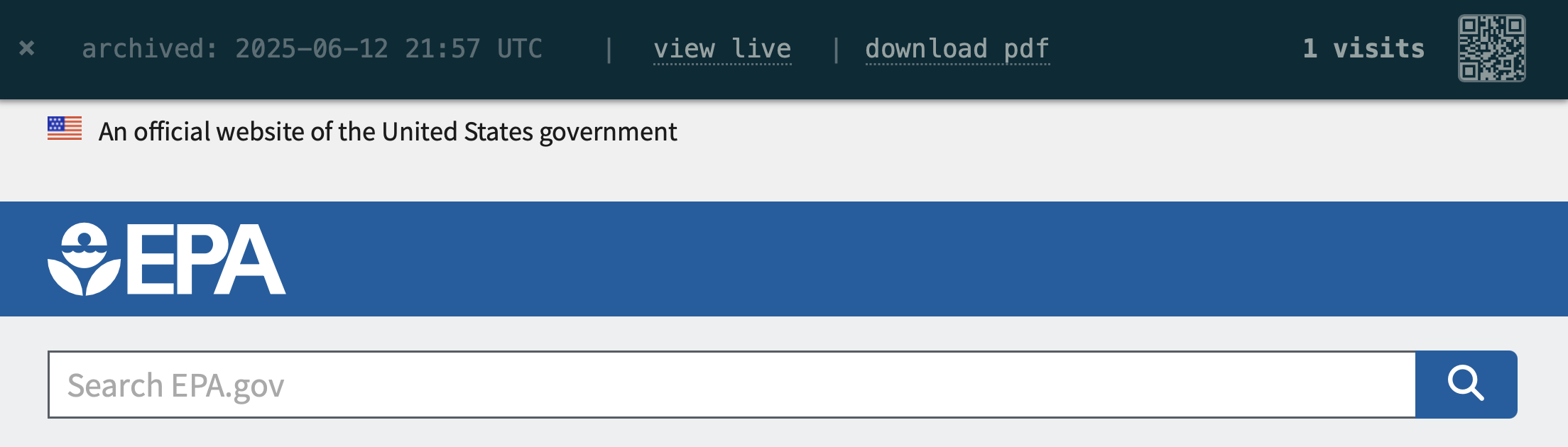

The backend pairs a standard ArchiveBox instance with a FastAPI server that I wrote to act as a smart proxy with basic URL shortening / analytics functionality built in. When you create a deepcite, the backend tells ArchiveBox to save a 100% self-contained archive of the page using SingleFile.
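The bridging idea can be sketched in a few lines of Python. This is not the actual proxy code: the class names, the short-code length, and the snapshot path are all hypothetical. It only illustrates why a short code helps. The client receives a stable link immediately, and the unpredictable snapshot location is attached later, once the archiver reports where it stored the page.

```python
import secrets
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass
class Citation:
    original_url: str
    cited_at: datetime
    snapshot_path: Optional[str] = None  # unknown until the archive job finishes
    hits: int = 0  # very basic analytics: how often the link was followed


@dataclass
class CitationRegistry:
    citations: Dict[str, Citation] = field(default_factory=dict)

    def create(self, original_url: str) -> str:
        """Mint a short code right away, before any snapshot exists."""
        code = secrets.token_urlsafe(6)
        while code in self.citations:  # retry on the rare collision
            code = secrets.token_urlsafe(6)
        self.citations[code] = Citation(original_url, datetime.now(timezone.utc))
        return code

    def attach_snapshot(self, code: str, snapshot_path: str) -> None:
        """Record where the archiver actually stored the page."""
        self.citations[code].snapshot_path = snapshot_path

    def resolve(self, code: str) -> str:
        """Count the visit and return the snapshot, or the live URL as a fallback."""
        citation = self.citations[code]
        citation.hits += 1
        return citation.snapshot_path or citation.original_url


registry = CitationRegistry()
code = registry.create("https://example.com/")
# Hypothetical path; the real one contains a timestamp chosen by the archiver.
registry.attach_snapshot(code, "archive/1750420800.123456/singlefile.html")
print(registry.resolve(code))
```

A real service would persist this mapping in a database rather than in memory, but the shape of the lookup stays the same.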

When the link is visited, the proxy serves that file after injecting a minimalist banner at the top to indicate:

- archival date;

- any delay between when the citation was made and when the page was archived (this can happen if ArchiveBox had a long job queue or was unresponsive);

- link to archived PDF of page;

- link to original / live page; and

- QR code for archival URL.
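As a rough illustration of the banner step, the Python sketch below computes the citation-to-archive delay and injects a small banner after the opening body tag. The function names, the one-minute threshold, and the banner markup are assumptions of mine, and the PDF link and QR code are omitted for brevity.

```python
import re
from datetime import datetime, timedelta, timezone
from html import escape
from typing import Optional


def describe_delay(
    cited_at: datetime,
    archived_at: datetime,
    threshold: timedelta = timedelta(minutes=1),  # assumed cutoff for "no delay"
) -> Optional[str]:
    """Return a short note when archiving lagged behind the citation."""
    delay = archived_at - cited_at
    if delay <= threshold:
        return None
    minutes = int(delay.total_seconds() // 60)
    return f"archived {minutes} min after citation"


def inject_banner(
    page_html: str, original_url: str, cited_at: datetime, archived_at: datetime
) -> str:
    """Insert a one-line banner at the top of an archived page."""
    parts = [f"Archived {archived_at:%Y-%m-%d %H:%M} UTC"]
    note = describe_delay(cited_at, archived_at)
    if note:
        parts.append(note)
    parts.append(f'<a href="{escape(original_url, quote=True)}">live page</a>')
    banner = '<div class="deepcite-banner">' + " | ".join(parts) + "</div>"

    # Place the banner right after the opening <body> tag if there is one;
    # otherwise fall back to prepending it to the document.
    match = re.search(r"<body\b[^>]*>", page_html, flags=re.IGNORECASE)
    if match is None:
        return banner + page_html
    return page_html[: match.end()] + banner + page_html[match.end():]


cited = datetime(2025, 6, 20, 12, 0, tzinfo=timezone.utc)
archived = cited + timedelta(minutes=45)
print(inject_banner("<html><body><p>Hi</p></body></html>",
                    "https://example.com/", cited, archived))
```

A regular expression is enough for a sketch, but it can be fooled by a body tag inside a comment or script, so a production proxy would more likely use an HTML parser.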

Try First On My Demo Server

Setting up a self-hosted server can be a project. To help decide if this is a workflow you'd find useful, you can point the client script to my public demo instance. To do this, configure the variable at the top of the JavaScript file:

PROXY_BASE_URL = 'https://cit.is'

Getting Your Own Setup Running

If you're as excited about this as I am, and want your very own permanent private archival deepciter, head over to my open source code repository to get started:

The README.md file in that repository provides the canonical step-by-step instructions. The setup process should be familiar to anyone who has dabbled in self-hosting. You will use a standard .env file to configure the ArchiveBox Docker container, and a config.yaml file to tell the proxy script where to find your ArchiveBox instance and how to behave. Once configured, you run the services with docker compose and the proxy script via Python.

Next Steps

This toolkit is already a core part of my own workflow, but I am considering several future improvements and welcome feedback. I'm currently weighing several ideas: adding the Internet Archive as an alternative to ArchiveBox; finding a creative way to bypass the need for a server script (perhaps by combining the Internet Archive with an API call to a link-shortening service); integrating deepcite functionality directly into ArchiveBox (i.e., by forking that project); and building browser extensions for a more polished UX than bookmarklets.

The web's citation problems aren't going away—if anything, the recent wave of government data disappearing has made clear how fragile our digital references really are. Deepcite won't solve every corner case, and setting up your own archive does require some technical effort. But for researchers, writers, and lawyers who depend on precise, durable evidence, the investment in a system you control is, I believe, a necessary one.

UPDATES

2025.06.20

I've added support for using SingleFile directly and bypassing ArchiveBox. In my testing so far SingleFile is faster, more reliable, simpler, and uses a lot less space. I.e., a win/win/win/win. SingleFile is therefore now the default mode.

2025.06.22

I'm excited to share that I'm busy building this out as a subscription service at cit.is. Stay tuned for announcements about a public beta soon. In the meantime, please note I'm moving the demo deepcites from https://sij.law/cite/ to https://cit.is/.